Apache Pig Tutorial

Pig is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs. The salient property of Pig programs is that their structure is amenable to substantial parallelization, which in turn enables them to handle very large data sets. (Definition from the Apache Pig project.)

Requirements for Pig

Have your Hadoop system set up first, because here Pig does not run alone: it runs in coordination with HDFS/MapReduce.

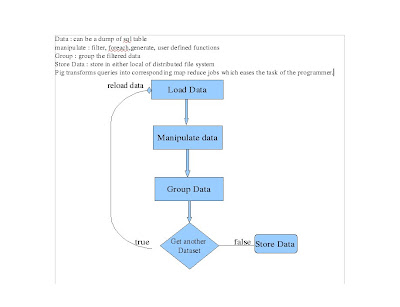

The general workflow of Pig is depicted in the workflow image.

The first step involves loading data. The data can be logs, SQL dumps, or text files. The second phase involves manipulating the data, for example filtering, applying FOREACH, DISTINCT, or any user-defined functions. The third step involves grouping the data. The final stage involves writing the data into the DFS, or repeating the steps if another dataset arrives.
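To make the four stages concrete, here is a minimal local sketch in plain Python (not Pig itself, and not how Pig executes jobs on a cluster) that walks passwd-style records through load, manipulate, group, and store. The sample records, the choice to drop `nologin` accounts, and all function names are illustrative assumptions; the comments only note which Pig operator each stage roughly corresponds to.

```python
from collections import defaultdict

# Hypothetical sample data in /etc/passwd format: name:passwd:uid:gid:gecos:home:shell
SAMPLE = [
    "root:x:0:0:root:/root:/bin/bash",
    "daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin",
    "hadoop:x:1000:1000:Hadoop:/home/hadoop:/bin/bash",
    "hadoop:x:1000:1000:Hadoop:/home/hadoop:/bin/bash",  # duplicate record
]


def load(lines):
    """Stage 1 (roughly LOAD ... USING PigStorage(':')): split lines into field tuples."""
    return [tuple(line.rstrip("\n").split(":")) for line in lines if line.strip()]


def manipulate(records):
    """Stage 2 (roughly DISTINCT + FILTER + FOREACH): dedupe, drop nologin, keep (name, shell)."""
    unique = sorted(set(records))
    return [(r[0], r[6]) for r in unique if len(r) >= 7 and not r[6].endswith("nologin")]


def group_by_shell(pairs):
    """Stage 3 (roughly GROUP ... BY shell): collect user names per shell."""
    groups = defaultdict(list)
    for name, shell in pairs:
        groups[shell].append(name)
    return dict(groups)


def render(groups):
    """Format each group as 'shell<TAB>count<TAB>comma-separated names'."""
    return [f"{shell}\t{len(names)}\t{','.join(sorted(names))}"
            for shell, names in sorted(groups.items())]


def store(groups, path):
    """Stage 4 (roughly STORE): write the rendered groups to a local file."""
    with open(path, "w") as out:
        out.write("\n".join(render(groups)) + "\n")


if __name__ == "__main__":
    for line in render(group_by_shell(manipulate(load(SAMPLE)))):
        print(line)
```

On a real cluster Pig compiles these steps into MapReduce jobs and reads/writes HDFS; the local version only shows the shape of the dataflow.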

Installing Hadoop is required for Pig. Make sure the NameNode and JobTracker are set in the Hadoop configuration files. These settings are necessary because Pig has to connect to the HDFS NameNode and the JobTracker node.
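As a quick sanity check on those settings, the sketch below parses Hadoop-style XML configuration and reports the NameNode and JobTracker addresses. It assumes the Hadoop 1.x property names `fs.default.name` (usually in core-site.xml) and `mapred.job.tracker` (usually in mapred-site.xml); newer Hadoop releases use different names (for example `fs.defaultFS`), and older releases keep both in a single hadoop-site.xml, in which case pass the same text for both arguments. The helper names and the `master:9000`/`master:9001` values are hypothetical.

```python
import xml.etree.ElementTree as ET

# Property names as used by Hadoop 1.x (assumption: adjust for your Hadoop version).
NAMENODE_KEY = "fs.default.name"
JOBTRACKER_KEY = "mapred.job.tracker"


def read_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def check_cluster_config(core_site_xml, mapred_site_xml):
    """Return (namenode, jobtracker); raise ValueError if either setting is missing."""
    namenode = read_properties(core_site_xml).get(NAMENODE_KEY)
    jobtracker = read_properties(mapred_site_xml).get(JOBTRACKER_KEY)
    missing = [key for key, value in ((NAMENODE_KEY, namenode), (JOBTRACKER_KEY, jobtracker))
               if not value]
    if missing:
        raise ValueError("missing Hadoop settings: " + ", ".join(missing))
    return namenode, jobtracker


if __name__ == "__main__":
    # Hypothetical example values; use the contents of your own conf files.
    core = """<configuration>
  <property><name>fs.default.name</name><value>hdfs://master:9000</value></property>
</configuration>"""
    mapred = """<configuration>
  <property><name>mapred.job.tracker</name><value>master:9001</value></property>
</configuration>"""
    print(check_cluster_config(core, mapred))
```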

Running Pig jobs in a cluster

Copy the sample dump to the DFS

bin/hadoop fs -copyFromLocal /home/hadoop/passwd .

In my case I am using a copy of /etc/passwd.

Edit pig.properties to point the cluster property at your JobTracker (the Map/Reduce master):

cluster=nameofJobTracker

Edit the bin/Pig.sh file in the Pig installation directory to include the following lines:

export JAVA_HOME=/path/to/your/java_home

export PIG_CLASSPATH=/path/to/hadoop/conf
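Before launching Pig, a small preflight check can catch the most common mistakes with these two variables: one is unset, or it points at a directory that does not exist. This is a hypothetical helper in plain Python, not part of Pig. It assumes PIG_CLASSPATH holds a single Hadoop conf directory (as in the line above) and that this directory contains either core-site.xml or, on older Hadoop releases, hadoop-site.xml.

```python
import os

# Site files that mark a Hadoop conf directory: core-site.xml (Hadoop 0.20 and later)
# or hadoop-site.xml (older releases). Assumption: adjust for your Hadoop version.
SITE_FILES = ("core-site.xml", "hadoop-site.xml")


def preflight(env=None):
    """Return a list of problems with JAVA_HOME / PIG_CLASSPATH (an empty list means OK)."""
    env = os.environ if env is None else env
    problems = []
    for var in ("JAVA_HOME", "PIG_CLASSPATH"):
        value = env.get(var, "").strip()
        if not value:
            problems.append(f"{var} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{var} points to a missing directory: {value}")
    # Only look for site files if PIG_CLASSPATH is an existing directory.
    conf_dir = env.get("PIG_CLASSPATH", "").strip()
    if conf_dir and os.path.isdir(conf_dir):
        if not any(os.path.isfile(os.path.join(conf_dir, name)) for name in SITE_FILES):
            problems.append(f"no Hadoop site file ({', '.join(SITE_FILES)}) in {conf_dir}")
    return problems


if __name__ == "__main__":
    for problem in preflight():
        print("WARNING:", problem)
```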

Now run the pig command from the Pig installation directory:

bin/pig

Pig should now connect to the Hadoop NameNode and JobTracker.

If it does not, there is a problem in the configuration; check the configuration settings above.

Now run your queries, and finally dump the output.
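A typical first query against the passwd file loads it with `:` as the field delimiter and keeps only the first field (the user name). The Pig Latin is shown in the comments; the Python below is only a local illustration of what that query computes, with hypothetical function names and inline sample lines standing in for the file in HDFS.

```python
# Pig Latin being mirrored (typed at the grunt> prompt):
#   A = load 'passwd' using PigStorage(':');
#   B = foreach A generate $0 as id;
#   dump B;

# Hypothetical sample lines in /etc/passwd format.
SAMPLE = [
    "root:x:0:0:root:/root:/bin/bash",
    "hadoop:x:1000:1000:Hadoop:/home/hadoop:/bin/bash",
]


def load_with_delimiter(lines, delimiter=":"):
    """Like PigStorage(':'): split each non-empty line into a tuple of fields."""
    return [tuple(line.rstrip("\n").split(delimiter)) for line in lines if line.strip()]


def project_first_field(records):
    """Like 'foreach A generate $0': keep only the first field of each record."""
    return [(record[0],) for record in records]


def dump(records):
    """Like DUMP: print each tuple in Pig's (field1,field2,...) style."""
    for record in records:
        print("(" + ",".join(record) + ")")


if __name__ == "__main__":
    dump(project_first_field(load_with_delimiter(SAMPLE)))
```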

Success!
